Kubernetes Upgrades, Done Right (Part 2): Technical Guide

A complete technical guide to automating, validating, and streamlining Kubernetes upgrades across EKS, AKS, and GKE — making upgrades fast, safe, and routine.

Kubernetes upgrades often evolve into time-consuming, high-risk projects that strain platform and DevOps teams. This technical guide provides a step-by-step framework to automate and streamline upgrades, minimizing risk while improving operational efficiency across AWS EKS, Azure AKS, and Google GKE environments.

This technical guide builds on our Strategic Guide to Kubernetes Upgrades. While the strategic guide focuses on organizational transformation and business value, this article provides hands-on frameworks, tools, and best practices for platform teams to operationalize upgrades efficiently across EKS, AKS, and GKE.

Every successful Kubernetes upgrade strategy begins with the right toolkit. Below are essential tools that help transform upgrades from manual projects into automated, repeatable operations.

While individual tools are critical, building an enterprise-grade Kubernetes upgrade strategy also requires a broader toolchain across observability, automation, testing, and security.

1. GitOps Tools (ArgoCD/Flux)

2. CI/CD Pipelines (Jenkins/GitHub Actions/GitLab CI)

Infrastructure as Code forms the backbone of a reliable Kubernetes upgrade strategy. By defining your infrastructure in code, you create:

Using Infrastructure as Code (IaC) creates a consistent, auditable approach to Kubernetes cluster management. Here's an example of how to implement this with Terraform for EKS:

    # EKS cluster configuration with managed node groups
    module "eks" {
      source = "terraform-aws-modules/eks/aws"

      cluster_name    = "my-cluster"
      cluster_version = "1.30" # Target version

      vpc_id     = module.vpc.vpc_id
      subnet_ids = module.vpc.private_subnets

      # Managed node group with update configuration
      eks_managed_node_groups = {
        primary = {
          name           = "primary-node-group"
          instance_types = ["m5.large"]
          min_size       = 2
          max_size       = 10
          desired_size   = 3

          # Control maximum unavailable nodes during upgrades
          update_config = {
            max_unavailable_percentage = 25
          }

          ami_type = "AL2023_x86_64_STANDARD"
          labels   = { Environment = "production" }
        }
      }

      # Keep add-ons current
      cluster_addons = {
        coredns    = { most_recent = true }
        kube-proxy = { most_recent = true }
        vpc-cni    = { most_recent = true }
      }
    }

Each cloud provider has specific approaches to Kubernetes management:

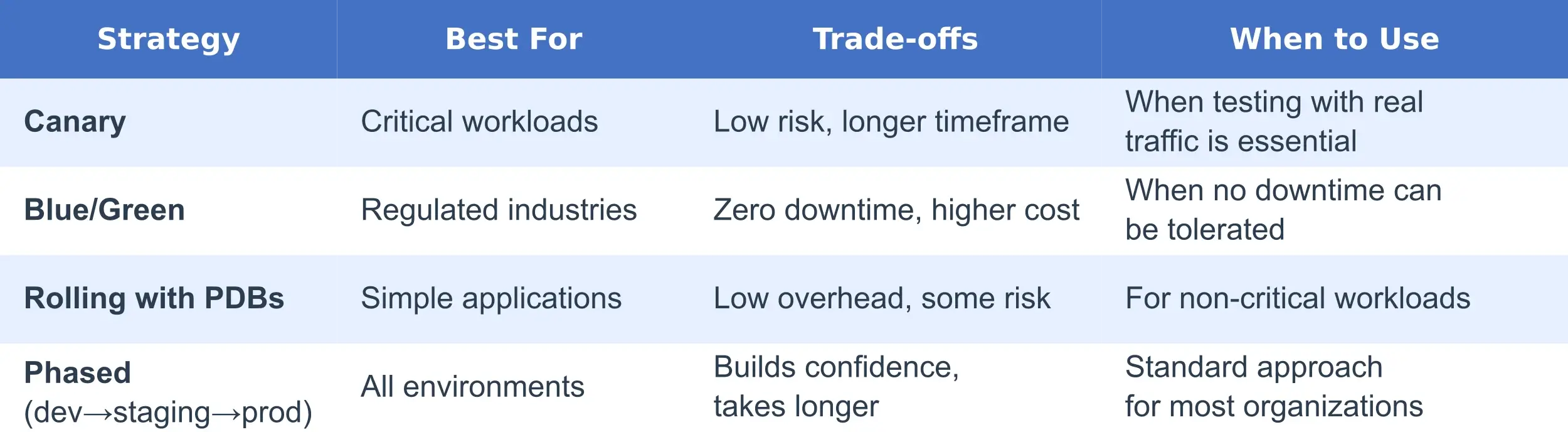

The technical approach to upgrades must balance risk mitigation with operational efficiency. Here are the key patterns to consider:

This approach involves creating a new node group with the upgraded Kubernetes version while maintaining your existing node groups. It's particularly effective for EKS, where node groups provide a natural boundary for canary testing.

Implementation steps:

For maximum safety in regulated environments or when major version jumps are necessary:

This approach eliminates in-place upgrade risks at the cost of additional infrastructure and coordination complexity.

For managed node groups in any cloud provider, Pod Disruption Budgets are essential to control workload availability during upgrades:

    # Example PDB ensuring 70% minimum availability
    apiVersion: policy/v1
    kind: PodDisruptionBudget
    metadata:
      name: app-pdb
    spec:
      minAvailable: 70%
      selector:
        matchLabels:
          app: critical-service

When combined with the appropriate node group configuration, PDBs ensure that enough pods remain available during the upgrade process to maintain service continuity.
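
How much headroom a percentage-based PDB leaves depends on the replica count, because Kubernetes rounds a percentage up to a whole number of pods. The following is a minimal Python sketch of that arithmetic, not the controller's actual code; the function names are hypothetical and it assumes the 70% value from the example above.

```python
def min_available_pods(expected_pods: int, min_available_percent: int) -> int:
    """Pods that must stay available; a percentage rounds up to a whole pod."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return (expected_pods * min_available_percent + 99) // 100


def allowed_disruptions(healthy_pods: int, expected_pods: int,
                        min_available_percent: int = 70) -> int:
    """Voluntary evictions the budget would permit right now (never negative)."""
    required = min_available_pods(expected_pods, min_available_percent)
    return max(0, healthy_pods - required)
```

With 10 healthy replicas the budget allows 3 evictions at a time, with 5 it allows 1, and with 3 it allows none, because 70% of 3 rounds up to 3. A budget that allows zero disruptions will stall a node drain, so small deployments may need more replicas or a lower percentage before an upgrade.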

Implementation in node group settings:

A robust testing framework transforms "hope-based" upgrades into confident, validated processes:

Before initiating any upgrade, run these essential validations:

1. API Compatibility Check:

    # Use Pluto to identify deprecated APIs
    pluto detect-all-in-cluster -o wide

    # Look specifically for critical workloads
    kubectl get deployments,statefulsets -A -o yaml | pluto detect -

2. Security Validation:

    # Run kube-bench against CIS benchmarks
    kube-bench --benchmark cis-1.6

3. Cluster Health Assessment:

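    # Check control plane health (componentstatuses has been deprecated since
    # Kubernetes 1.19, so query the API server's readiness endpoint instead)
    kubectl get --raw='/readyz?verbose'

    # Verify all nodes are ready
    kubectl get nodes

    # Check for pending or failed pods (completed Job pods are excluded)
    kubectl get pods --all-namespaces --field-selector=status.phase!=Running,status.phase!=Succeeded

4. Application Readiness: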

    # Ensure PDBs exist for critical workloads
    kubectl get pdb --all-namespaces

    # Verify horizontal pod autoscalers
    kubectl get hpa --all-namespaces

After the upgrade completes, implement comprehensive validation:

1. Core Kubernetes API Testing:

    # Test creating temporary resources
    kubectl run test-pod --image=busybox -- sleep 300
    kubectl expose pod test-pod --port=80 --target-port=8080

    # Clean up the temporary resources afterwards
    kubectl delete service/test-pod pod/test-pod

2. Storage Validation:

    # Test persistent volume provisioning
    kubectl apply -f test-pvc.yaml
    kubectl get pvc

3. Application Testing:

    # Verify all deployments are available
    kubectl get deployments --all-namespaces

    # Check statefulsets
    kubectl get statefulsets --all-namespaces

    # Run application-specific health checks
    ./run-app-tests.sh

4. Smoke Testing:

After validating core Kubernetes resources and application health, it’s critical to run smoke tests that simulate real-world usage patterns.

Smoke testing helps catch hidden issues such as:

Example approach using K6 for quick smoke testing:

    k6 run smoke-test.js

Tip: Watch out for our upcoming comprehensive guide on using K6 for load and stress testing in Kubernetes environments!
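
The deployment listing in the application testing step still leaves the comparison of desired and available replicas to a human. One way to automate it is sketched below in Python, assuming the input is the JSON printed by `kubectl get deployments --all-namespaces -o json`; the function name is hypothetical.

```python
import json


def unavailable_deployments(deployment_list_json: str) -> list[str]:
    """Return one line per deployment with fewer available replicas than desired."""
    data = json.loads(deployment_list_json)
    problems = []
    for item in data.get("items", []):
        meta = item["metadata"]
        # spec.replicas defaults to 1; availableReplicas is omitted when it is 0.
        desired = item.get("spec", {}).get("replicas", 1)
        available = item.get("status", {}).get("availableReplicas", 0)
        if available < desired:
            problems.append(
                f'{meta["namespace"]}/{meta["name"]}: {available}/{desired} available'
            )
    return problems
```

An empty result means every deployment reports its full replica count. In a pipeline, the kubectl output could be piped into a small wrapper that calls this function and fails the job when the list is non-empty.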

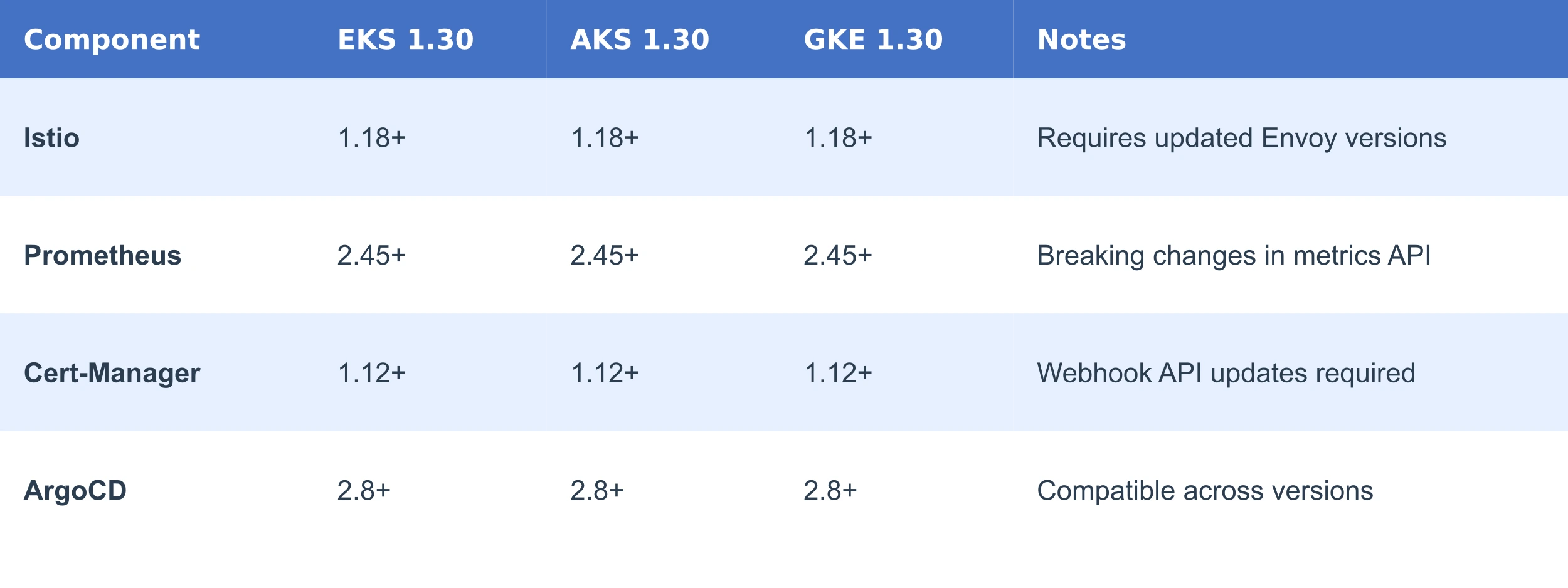

Managing Kubernetes version compatibility is critical to ensuring smooth upgrades and minimizing operational risks. This section provides a complete framework for handling version compatibility across the control plane, node groups, and workloads.

Different Kubernetes-related components, such as service meshes, monitoring tools, and certificate managers, often have strict version requirements tied to specific Kubernetes releases.

Here’s a sample compatibility matrix for common components with Kubernetes 1.30:

Before upgrading, ensure all critical workloads are compatible with the target Kubernetes version.

Each cloud provider enforces rules about how far apart the control plane and worker node versions can be:

Command to Check Node Version Skew:

    kubectl get nodes -o custom-columns=NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion | sort -k2

Here’s a simple policy reminder you should enforce in your cluster management:

Skew beyond the allowed limit can break cluster functionality or block upgrades.
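
A skew check like this is easy to automate. The Python sketch below takes the two-column NAME/VERSION output of the node version command and flags nodes that are newer than the control plane or too far behind it. The function names are hypothetical, and the default limit of three minor versions is an assumption based on the upstream Kubernetes skew policy for recent releases; your provider's limit may be stricter.

```python
import re

_MAJOR_MINOR = re.compile(r"v?(\d+)\.(\d+)")


def minor_version(version: str) -> tuple[int, int]:
    """Extract (major, minor) from strings such as 'v1.29.4-gke.1043002'."""
    match = _MAJOR_MINOR.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognised version: {version!r}")
    return int(match.group(1)), int(match.group(2))


def skew_violations(control_plane: str, node_lines: list[str],
                    max_skew: int = 3) -> list[str]:
    """List nodes whose kubelet is newer than, or too far behind, the control plane."""
    cp_major, cp_minor = minor_version(control_plane)
    problems = []
    for line in node_lines:
        fields = line.split()
        if len(fields) != 2 or fields[0] == "NAME":
            continue  # skip the header row and blank lines
        name, version = fields
        major, minor = minor_version(version)
        behind = cp_minor - minor
        if major != cp_major or behind < 0 or behind > max_skew:
            problems.append(f"{name}: {version} vs control plane {control_plane}")
    return problems
```

Running this as a gate before each control plane upgrade catches node groups that would fall outside the limit once the control plane moves forward: pass the target version as `control_plane` rather than the current one.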

Always be aware of the support lifecycle for each Kubernetes minor version on your cloud platform. Staying ahead of EOL deadlines ensures that you avoid forced upgrades, extended support fees, and security risks.

Tip: Plan upgrades at least 3–6 months before EOL to avoid rushed migrations or extended support fees.
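
The 3–6 month rule can be turned into a simple calendar check. The sketch below is one possible reading of the tip, in Python: planning should start six months before EOL and the upgrade should be done three months before it. The function names and status labels are hypothetical, and the EOL date must come from your provider's published release calendar.

```python
import calendar
from datetime import date


def months_before(day: date, months: int) -> date:
    """Return the date `months` calendar months before `day`, clamping the day of month."""
    index = day.year * 12 + (day.month - 1) - months
    year, month_index = divmod(index, 12)
    month = month_index + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(day.day, last_day))


def planning_window(eol: date) -> tuple[date, date]:
    """Start planning 6 months before EOL; aim to finish 3 months before EOL."""
    return months_before(eol, 6), months_before(eol, 3)


def upgrade_status(eol: date, today: date) -> str:
    """Classify where `today` falls relative to the planning window."""
    start, deadline = planning_window(eol)
    if today < start:
        return "on track"
    if today <= deadline:
        return "plan now"
    return "overdue"
```

For an illustrative EOL date of 31 August 2025, the window runs from 28 February to 31 May 2025. A scheduled job could evaluate `upgrade_status` for each cluster and open a ticket as soon as the result changes to "plan now".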

Networking components are critical during Kubernetes upgrades.

CNI plugins must be compatible with the new Kubernetes version:

Amazon EKS:

Azure AKS:

Google GKE:

Always verify CNI versions and upgrade them before or immediately after node pool upgrades.

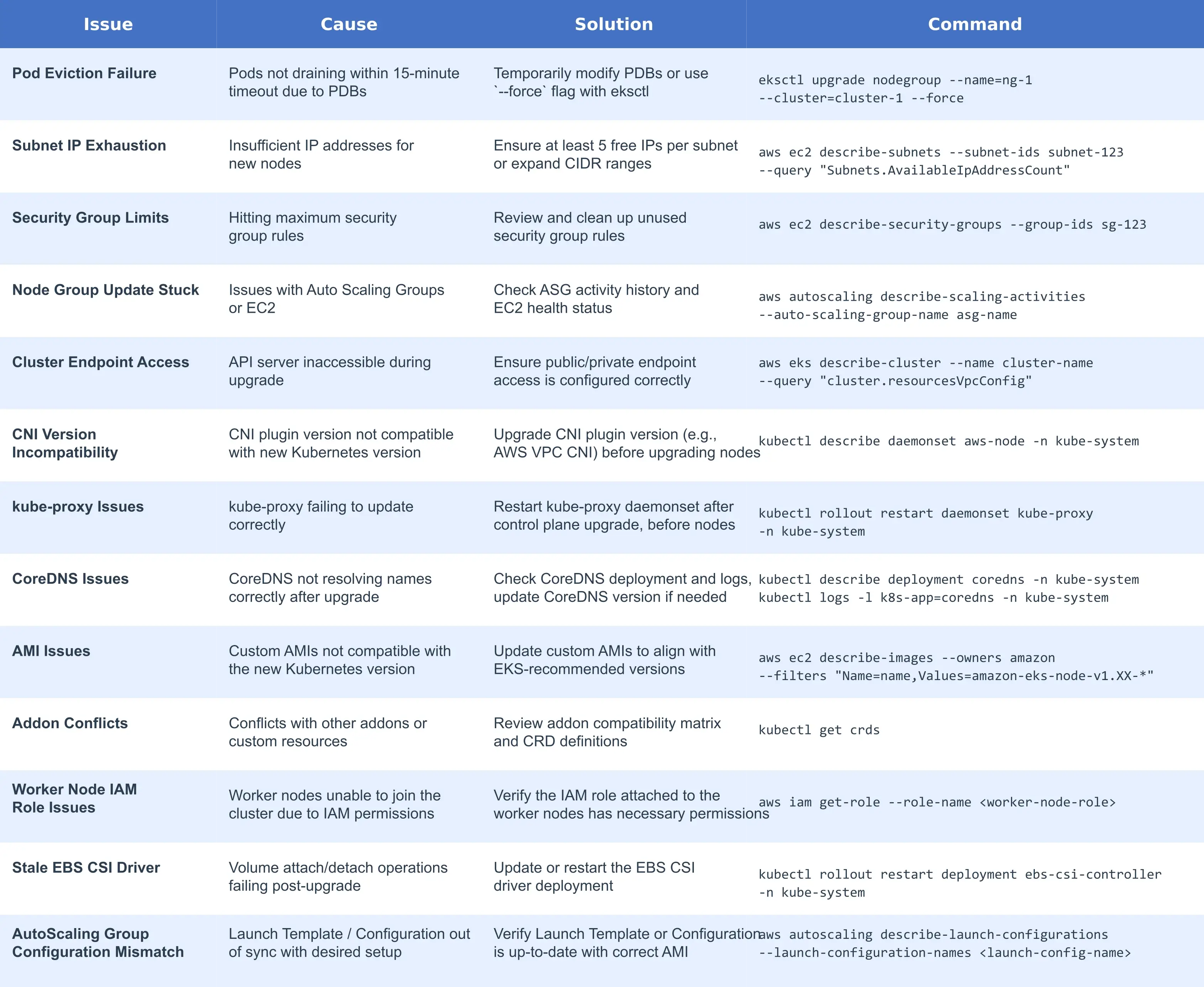

Even with careful planning, Kubernetes upgrades can encounter operational challenges. Below is a comprehensive guide to the most common cluster-specific issues, their root causes, recommended solutions, and key commands to resolve them quickly.

These are the most frequent problems that occur during Kubernetes upgrades within a single cluster environment, particularly in EKS, AKS, and GKE managed clusters.

When upgrading Kubernetes across different cloud providers, additional platform-agnostic issues can emerge. This table summarizes the most common cross-cloud upgrade problems and how to address them effectively.

The best approach is to prevent issues before they occur:

Looking ahead to Kubernetes 1.31 and beyond, prepare for these upcoming changes:

Kubernetes is removing in-tree cloud provider integrations. To prepare:

1. Test external cloud provider components in non-production

2. Update your Terraform configurations to include external controllers:

    # AWS Cloud Controller Manager deployment
    resource "kubernetes_deployment" "aws_cloud_controller_manager" {
      metadata {
        name      = "aws-cloud-controller-manager"
        namespace = "kube-system"
      }

      # Configuration details...
    }

Flags like --keep-terminated-pod-volumes are being removed. Audit your configurations:

    # Check for usage in your configurations
    grep -r "keep-terminated-pod-volumes" ./k8s-manifests/

    # Review kubelet configurations
    kubectl get cm -n kube-system kubelet-config -o yaml

In-tree volume plugins are moving to CSI implementations:

    # Verify CSI drivers are installed
    kubectl get pods -n kube-system | grep csi

    # Check for StorageClass configurations using CSI
    kubectl get storageclass

Bringing all the elements together, here's a comprehensive approach to making Kubernetes upgrades business-as-usual:

Implement a predictable upgrade schedule:

For most enterprises, a phased approach works best:

To truly transform Kubernetes upgrades from risky projects into routine operations, automation must be embedded across every stage of the upgrade lifecycle. The goal is simple: no manual steps, no guesswork, no surprises.

Focus on automating the following:

Discovery and Validation:

Backup and Recovery:

Infrastructure as Code (IaC):

Strategic Rollouts:

Testing Frameworks:

Monitoring and Observability:

Governance Integration:

By treating Kubernetes upgrades as a continuous, automated process rather than isolated projects, platform teams can dramatically reduce risk, improve cluster health, and drive faster innovation cycles. A robust upgrade strategy today is the foundation for operational excellence tomorrow. Start small, automate everything, and make upgrades boring — that's the goal.

A successful Kubernetes strategy relies on continuous learning and community engagement. Here are essential resources for platform engineering teams:

Looking for the business case and organizational strategies behind seamless Kubernetes upgrades?

Explore Part 1: Strategic Guide to complete the full playbook.

Ready to simplify your Kubernetes upgrades?

Book a Demo or Contact Us to learn how Aokumo can help automate and streamline your operations.